MQA and DACs

It’s all about personal preferences

There are already many articles about MQA, and many debates around DACs that support it. This is our attempt to take a simplified view of the topic, so certain highly debatable points are left out where no technical clarification has been published.

Firstly, we take a look at MQA. In a nutshell, MQA tries to pack large audio data into a smaller file through its proprietary ‘folding’ process. The algorithm and the level of compression remain a mystery to everyone outside the know, so let’s ignore the bit-resolution portion for now. The compressed file should offer CD quality in a smaller package and can be played with equipment/software that doesn’t support MQA decoding. With MQA-capable equipment/software, you could ‘unfold’ these files and potentially recover the original high sample-rate master.

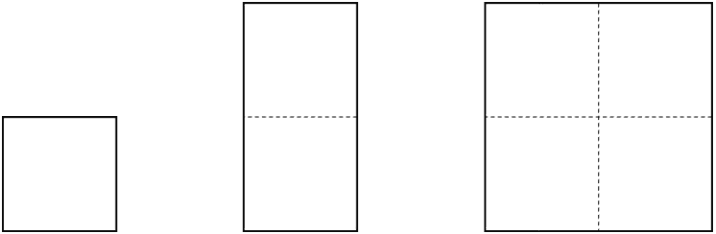

Let’s take the example of a 192k (192 kHz) sample-rate MQA master. By simple calculation, one fold takes it down to 96k, and another takes it to 48k, which is roughly CD quality. Similarly, a 176.4k MQA master is folded twice to arrive at a 44.1k file, the actual CD sample rate.
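The halving arithmetic above can be sketched in a few lines of Python. This is only a model of the simplified view taken in this article (each fold halves the rate until a 44.1k or 48k base is reached); it says nothing about how MQA actually encodes the data. The function name `fold_chain` and the `base_rates` parameter are our own, purely for illustration.

```python
def fold_chain(master_rate_hz, base_rates=(44_100, 48_000)):
    """Return the rates visited when halving a master rate down to a base rate.

    Assumption: one 'fold' simply halves the sample rate. This mirrors the
    article's simplified arithmetic, not MQA's proprietary process.
    """
    chain = [master_rate_hz]
    rate = master_rate_hz
    while rate not in base_rates:
        # Stop if halving can never land on one of the base rates.
        if rate % 2 or rate < min(base_rates):
            raise ValueError(f"{master_rate_hz} Hz does not halve to a base rate")
        rate //= 2
        chain.append(rate)
    return chain


print(fold_chain(192_000))  # [192000, 96000, 48000]
print(fold_chain(176_400))  # [176400, 88200, 44100]
```

The number of folds is simply the length of the returned list minus one, so both examples above need two folds.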

A 384k or 352.8k sample-rate MQA master has to go through one more fold. According to the MQA website, the first unfold is processed by an MQA Core Decoder, and subsequent unfolds go through an MQA Renderer. This split may be due to both technical and non-technical reasons. As the MQA Core Decoder can be implemented in software, users can enjoy Hi-Res quality from MQA files even without an MQA Renderer capable DAC. With such a DAC, further unfolds promise even higher quality at the ‘original’ master sample rate. The reason the MQA Renderer is not implemented in software is a technical limitation, and there’s no need to dig deeper here.
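To make the two-stage split concrete, here is a small Python sketch that lists which stage would handle each unfold for a given master rate. It assumes, as this article does, that every unfold doubles the rate, that the first unfold belongs to the MQA Core Decoder and that all later ones belong to the MQA Renderer. The function `unfold_plan` is hypothetical and does no audio processing at all.

```python
def unfold_plan(master_rate_hz, base_rates=(44_100, 48_000)):
    """List (stage, from_rate, to_rate) steps from the base rate up to the master.

    Assumption: each unfold doubles the sample rate; the first unfold is done
    by the Core Decoder and the remaining ones by the Renderer.
    """
    # Count how many halvings separate the master from its base rate.
    rate = master_rate_hz
    folds = 0
    while rate not in base_rates:
        if rate % 2 or rate < min(base_rates):
            raise ValueError(f"{master_rate_hz} Hz does not halve to a base rate")
        rate //= 2
        folds += 1

    # Walk back up, labelling each doubling with the stage that performs it.
    plan = []
    for step in range(folds):
        stage = "MQA Core Decoder" if step == 0 else "MQA Renderer"
        plan.append((stage, rate, rate * 2))
        rate *= 2
    return plan


for stage, src, dst in unfold_plan(384_000):
    print(f"{stage}: {src} Hz -> {dst} Hz")
```

For a 384k master this prints one Core Decoder step (48k to 96k) followed by two Renderer steps (96k to 192k, then 192k to 384k), which is why a software-only setup tops out at 88.2k or 96k.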

As many have asked, what’s the difference compared with up-sampling? Take a look at the diagram below.

In this case, instead of unfolding, the sample rate is ‘multiplied’ up to the desired higher rate by resampling. As you may have noticed, to get to the ‘same’ big square, you have to start with a larger small square. Most up-sampling is achieved through relatively straightforward weighted interpolation (i.e., adding the in-between samples), so it’s a less complicated process that has been fully implemented in various audio manipulation and playback software as well as in pure hardware. There is no complex two-stage process here either.
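As a rough illustration of ‘adding the in-between samples’, the sketch below up-samples a short list of sample values by an integer factor using plain linear interpolation. This is the simplest possible weighting and is our own toy example; real up-samplers typically use longer filters, so treat `upsample_linear` as a hypothetical helper rather than what any particular player or DAC does.

```python
def upsample_linear(samples, factor):
    """Up-sample by an integer factor using linear interpolation.

    Between each pair of neighbouring samples, (factor - 1) new values are
    inserted on the straight line joining them. Toy example only.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    if len(samples) < 2:
        return list(samples)

    out = []
    for a, b in zip(samples, samples[1:]):
        for k in range(factor):
            # k = 0 reproduces the original sample; k > 0 fills the gap.
            out.append(a + (b - a) * k / factor)
    out.append(samples[-1])  # keep the final original sample
    return out


print(upsample_linear([0.0, 1.0, 0.0], 2))  # [0.0, 0.5, 1.0, 0.5, 0.0]
```

Note that every new value is computed from its neighbours, so nothing is recovered that was not already implied by the source samples. That is the key contrast with unfolding, which claims to restore information that was packed into the file.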

At first glance, it seems that if you are willing to live with a larger 44.1k or 48k source file, you can achieve the same result with up-sampling. But why do they still sound different? The argument on the MQA front would be that the same known process is used both for compressing the masters and for the extraction, so the result is more consistent. However, there’s also a good chance that a good approximation used in interpolation increases the data’s definition with reasonable accuracy. Also, it is known that MQA discards ‘inaudible’ data during the folding.

Should one sound better than the other? I don’t think there’s a definitive answer, and it likely comes down to individual preferences.

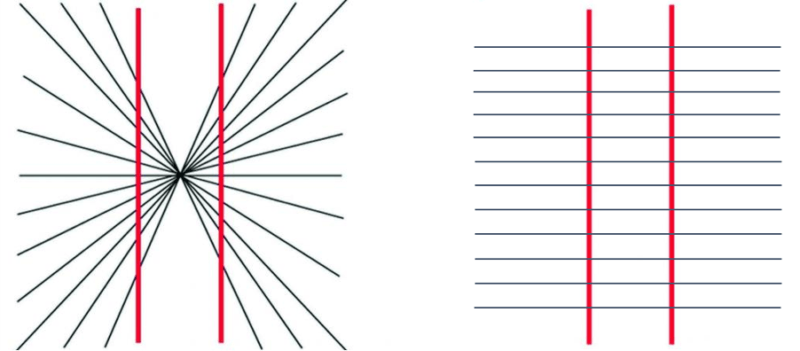

Take a look at the 2 diagrams below.

The above well-known optical illusion tells us that our mind can perceive things differently depending on the surrounding information. So although losses from compression techniques and gains from ‘guesstimation’ both attempt to arrive at mostly the same core information, a difference in the underlying process (the background) can make them feel quite dissimilar. This is where arguments over which approach is better start, and where listener preferences begin to develop. Individual preference shows up even more strongly when the listening test involves an actual Hi-Res recording, where different listeners preferred different formats of the reproduction.

So, where does the DAC come into play? As the name implies, the core job of a DAC is to perform digital-to-analog conversion. The input section receives the sender’s data through a variety of digital inputs and feeds digital audio data to the DAC section. Hence, it is logical to have the MQA decoder/renderer built into the input section, before the decoded digital audio is sent to the DAC. This may one day change if the adoption of MQA rises so sharply that DAC chip manufacturers and custom DAC designers have to integrate ‘native’ MQA decoding. Putting that scenario aside, let’s try to understand the necessity of an MQA-capable DAC.

We already know that the MQA Renderer has to be implemented in hardware, so there’s not much of a choice. But if you’re only interested in the first unfold, it can be done in software, feeding either an 88.2k or 96k sample-rate stream to any PCM-capable DAC that can handle these two rates. A regular multi-purpose PC may not be the best source due to noise and other interference. Still, a purpose-built PC-based transport for Tidal Master playback matched with a good non-MQA DAC might be better than a budget-level MQA-capable streamer/DAC. As with the various other software and hardware audio formats already around, the quality of the media source (i.e., the recording) and of the playback equipment would likely weigh more than the technology itself.